Waifu2x Extension GUI is a graphical user interface for upscaling images, also known as super-resolution. It is not just a generic upscaler, though: it uses deep convolutional neural networks to upscale images. It is free and open source, so anyone can use it. Instead of enlarging an image with old, simple conventional algorithms, Waifu2x Extension GUI integrates multiple neural networks that each have their own advantages and are all miles better than those algorithms. Some of the neural networks that Waifu2x Extension GUI supports are waifu2x, Real-CUGAN, and Real-ESRGAN.

Waifu2x produces good-quality upscaled images for its age, but in my opinion it has been superseded by some newer neural networks. Real-CUGAN is very fast and produces the sharpest, high-quality images, but unlike the other two neural networks mentioned here, it only supports super-resolution of 2D anime-style images. Real-ESRGAN works well for both 2D and 3D images. The quality of its results is very high, but it takes much longer to upscale an image than Real-CUGAN does.

I highly recommend you try it out. It’s free and open source, and it receives updates fairly frequently. GitHub project: https://github.com/AaronFeng753/Waifu2x-Extension-GUI

Here are some pictures comparing the few neural networks that I briefly introduced. The original image is only 344 by 346 pixels. It’s upscaled 4 times in each dimension, which gives 16 times the number of pixels. It’s really incredible that the neural networks are able to fill in such a large share of additional pixels based on the original image while also enhancing the quality and sharpness.
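To make the numbers concrete, here is a small Python sketch of the arithmetic. The helper name `upscaled_size` is just something I made up for illustration; it is not part of Waifu2x Extension GUI.

```python
def upscaled_size(width, height, scale):
    """Return the output dimensions when each side is multiplied by `scale`."""
    return width * scale, height * scale


orig_w, orig_h = 344, 346                      # size of the original image
new_w, new_h = upscaled_size(orig_w, orig_h, 4)

orig_pixels = orig_w * orig_h
new_pixels = new_w * new_h
pixel_ratio = new_pixels // orig_pixels        # scale squared
generated_share = 1 - orig_pixels / new_pixels # pixels with no 1:1 source pixel

print(f"{orig_w}x{orig_h} -> {new_w}x{new_h}")
print(f"{orig_pixels:,} -> {new_pixels:,} pixels ({pixel_ratio}x)")
print(f"Share of output pixels the network has to fill in: {generated_share:.2%}")
```

So the output is 1376 by 1384 pixels, and roughly 15 out of every 16 output pixels have no direct counterpart in the original.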

Image Generation: Stable Diffusion

Stable Diffusion is an AI image generation tool I discovered in late 2022 that really fascinated me. Its working principles are kind of abstract, but I tried my best to understand how it works. Here’s my own understanding: Imagine there is an image, and slowly, step by step, a little bit of noise is added to it, until it becomes unrecognizable and basically consists of noise only. Stable Diffusion essentially runs the process described above in reverse. It starts with an image composed entirely of noise, then denoises it step by step until a clear, perfectly recognizable image is left as the output. Crazy, right? I thought so too.
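The noising and denoising idea can be sketched in a few lines of plain Python. This is only a toy under my own assumptions, not how Stable Diffusion is actually implemented: the function names, the 5-pixel "image" and the linear noise schedule are all illustrative choices, and the real tool works on compressed latent images and uses a trained neural network to predict the noise, applied over many steps. Here I simply reuse the known noise to show that removing it brings the image back.

```python
import math
import random


def make_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """How much of the original image survives after each noising step
    (assumed linear noise schedule; values shrink towards 0)."""
    alpha_bars = []
    product = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        product *= 1.0 - beta
        alpha_bars.append(product)
    return alpha_bars


def add_noise(pixels, alpha_bar, rng):
    """Forward process: mix the image with Gaussian noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [math.sqrt(alpha_bar) * p + math.sqrt(1.0 - alpha_bar) * n
             for p, n in zip(pixels, noise)]
    return noisy, noise


def remove_noise(noisy, noise, alpha_bar):
    """Reverse direction: subtract the noise and rescale.
    A trained model would have to *predict* `noise`; here it is given."""
    return [(x - math.sqrt(1.0 - alpha_bar) * n) / math.sqrt(alpha_bar)
            for x, n in zip(noisy, noise)]


rng = random.Random(0)
image = [0.0, 0.25, 0.5, 0.75, 1.0]            # a tiny 5-pixel "image"
alpha_bars = make_alpha_bars(1000)

noisy, noise = add_noise(image, alpha_bars[-1], rng)
recovered = remove_noise(noisy, noise, alpha_bars[-1])

print("signal left after the last step:", alpha_bars[-1])
print("recovered:", [round(p, 3) for p in recovered])
```

After the last step almost none of the original signal is left, which is why the starting point for generation can be pure noise.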

With Stable Diffusion, you can change the input prompt or tweak various parameters to get diverse and unique results. In addition, there are tools that give you even more control over the generated image. One of those is ControlNet. ControlNet lets you generate images guided by a conditioning input that you supply, such as an edge map, a depth map, or a pose skeleton. The introduction of ControlNet grants us unprecedented control over image generation, because the generated image will not deviate more than desired from that input.

Links to the corresponding GitHub projects:

Stable Diffusion web UI: https://github.com/AUTOMATIC1111/stable-diffusion-webui

ControlNet: https://github.com/lllyasviel/ControlNet

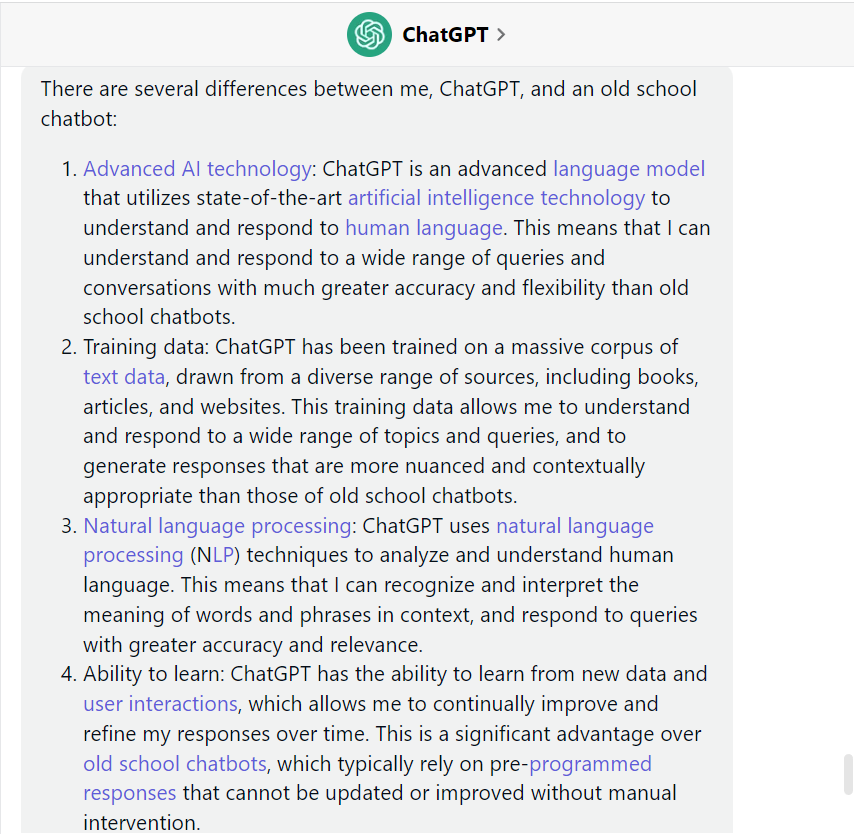

Chatbot: ChatGPT, New Bing

Voice Generation: VITS/DiffSinger

Placeholder

AI Vtuber: Neuro-sama (Twitch: vedal987)

Last updated: 2024/01/19